Introduction to Omniscient Rendering

Omniscient rendering represents a groundbreaking approach in the field of computer graphics and game development, positing a future where game engines can pre-calculate and understand all potential states of every object within a virtual environment. This methodology extends beyond traditional rendering techniques, which typically focus on depicting the visual state of a game at individual moments. Omniscient rendering seeks to encapsulate the entirety of an object’s possible states over time, essentially creating a “four-dimensional” model of the game world.

Interactions between user and object, and between one object and another, form the basis of change over time, and this change is what creates the fourth dimension of each object. Once the 3D models are available, game levels can be created. Instances of the models are placed in a level, and the relationships between each instance and all other existing objects are calculated forward in time based on possible interactions. The resulting changes form the 4D timeline of each model instance. The algorithm that performs this calculation forms the pre-rendering engine.
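To make the forward calculation concrete, here is a minimal Python sketch of such a pre-rendering pass. It assumes objects with discrete, named states and a hypothetical table of pairwise interaction rules (`RULES`). At each step every applicable interaction may or may not occur, so the world branches; identical world states reached by different paths are merged, which turns the branching timeline into a layered graph rather than a tree. The names `successors`, `pre_render`, and `object_timeline` are illustrative only.

```python
from itertools import combinations

# Hypothetical interaction rules: (state_a, state_b) -> (new_state_a, new_state_b).
RULES = {
    ("ball:rolling", "vase:intact"): ("ball:stopped", "vase:broken"),
    ("ball:stopped", "player:idle"): ("ball:rolling", "player:idle"),
}


def successors(world):
    """Yield every world state reachable in one step, including 'nothing happens'."""
    yield world
    for i, j in combinations(range(len(world)), 2):
        for a, b in ((i, j), (j, i)):  # rules are directional, so try both orders
            key = (world[a], world[b])
            if key in RULES:
                new = list(world)
                new[a], new[b] = RULES[key]
                yield tuple(new)


def pre_render(initial, horizon):
    """Return one set of possible world states per time step (a branching 4D timeline)."""
    layers = [{initial}]
    for _ in range(horizon):
        nxt = set()
        for world in layers[-1]:
            nxt.update(successors(world))  # duplicate branches merge here
        layers.append(nxt)
    return layers


def object_timeline(layers, index):
    """Project the world timeline onto one object: its possible states per step."""
    return [sorted({world[index] for world in layer}) for layer in layers]
```

For example, `object_timeline(pre_render(("ball:rolling", "vase:intact", "player:idle"), 2), 1)` lists the vase as only intact at step 0 and as either broken or intact afterwards.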

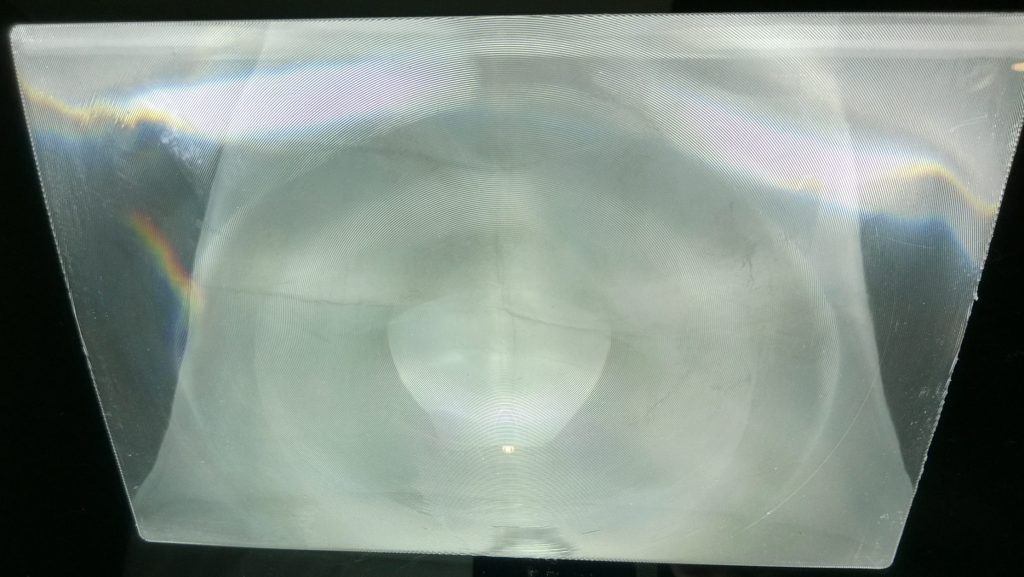

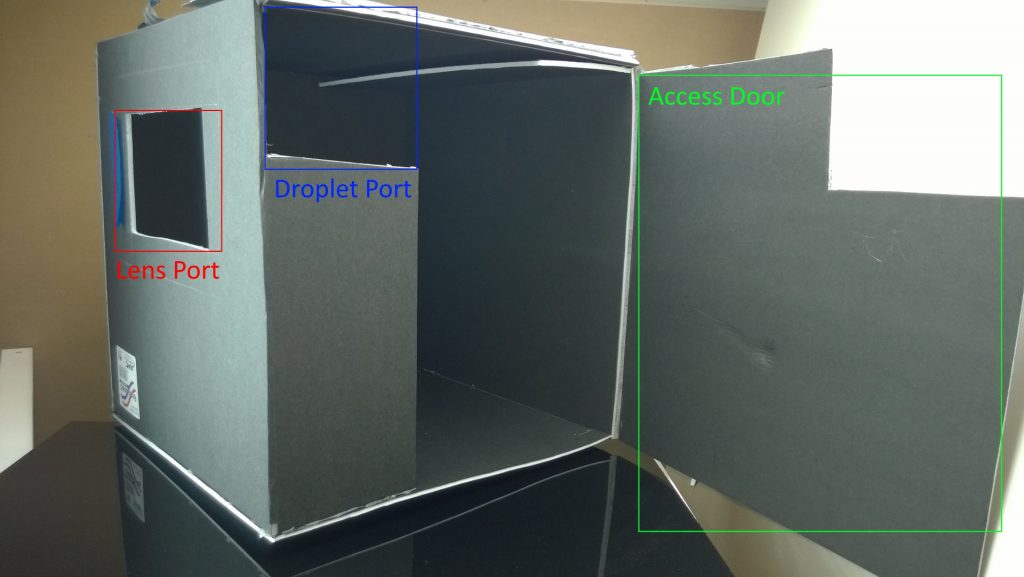

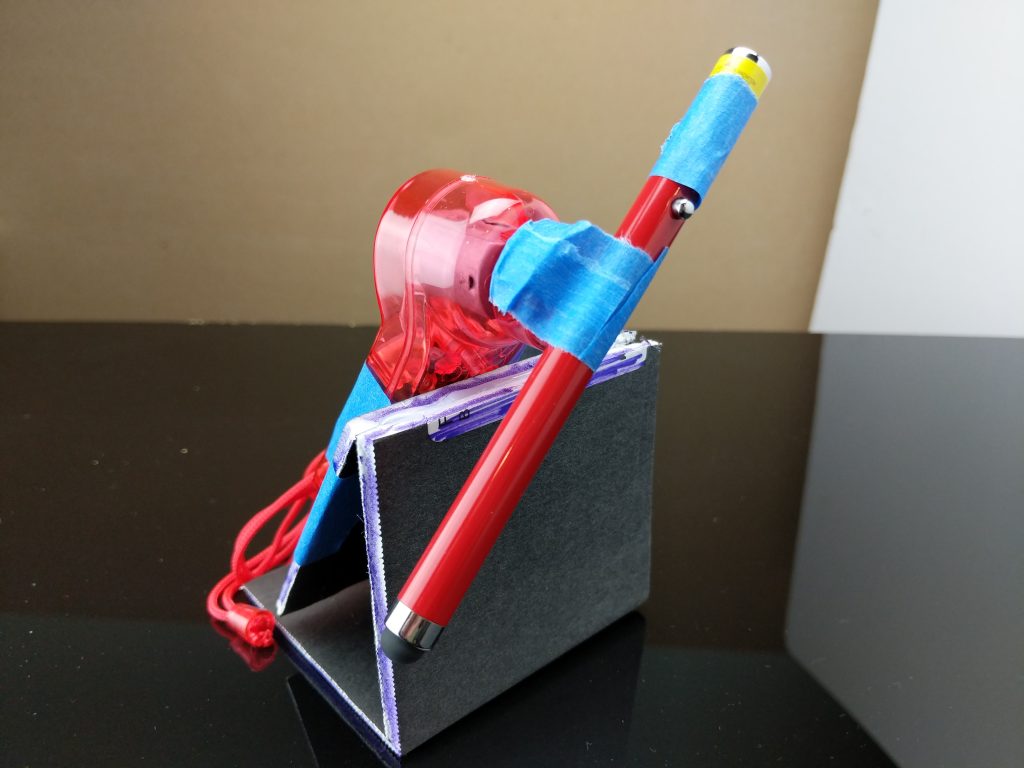

Drawing on the idea of visualizing higher dimensions by projecting them into lower ones (4D into 3D, 3D into 2D), every point in time of a 3D model can be pre-rendered and projected into a 2D visualization from every possible point of view (POV). Each visualization will be linked to all other visualizations from that POV. Together, these visualizations form the 5D sprite, which will then be used to train an algorithm to render a 2D POV containing all sprites. The resulting “large sprite model” or “large object model” (LOM) will accept user input, both movement and interaction, to render the next POV, or frame of display output. The LOM becomes the game engine.
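The projection step could look like the following sketch, which assumes a simple pinhole camera, a hypothetical ring of POVs around the origin, and a timeline stored as a list of 3D point sets. Each `(time step, POV)` key indexes one 2D projection, so the dictionary as a whole plays the role of a (very small) 5D sprite. Real pre-rendering would rasterize shaded images rather than project bare points, and `project`, `ring_povs`, and `pre_render_sprites` are illustrative names.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def project(point, eye, target=(0.0, 0.0, 0.0), up=(0.0, 1.0, 0.0), focal=1.0):
    """Pinhole projection for a camera at `eye` looking at `target`.

    Assumes the view direction is never parallel to `up`.
    """
    forward = normalize(sub(target, eye))
    right = normalize(cross(forward, up))
    cam_up = cross(right, forward)
    d = sub(point, eye)
    z = dot(d, forward)
    if z <= 0:
        return None  # point is behind the camera
    return (focal * dot(d, right) / z, focal * dot(d, cam_up) / z)


def ring_povs(n, radius, height=0.0):
    """Hypothetical POV set: n cameras evenly spaced on a horizontal ring."""
    return [(radius * math.sin(2 * math.pi * k / n), height, radius * math.cos(2 * math.pi * k / n))
            for k in range(n)]


def pre_render_sprites(timeline, povs):
    """timeline: list of 3D point lists, one per time step. Returns {(t, view): 2D points}."""
    sprites = {}
    for t, points in enumerate(timeline):
        for v, eye in enumerate(povs):
            sprites[(t, v)] = [project(p, eye) for p in points]
    return sprites
```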

Technical Foundations

4D Spatiotemporal Modeling

Omniscient rendering necessitates a robust understanding of 4D spatiotemporal modeling. In this context, the fourth dimension (time) is integrated with the traditional three spatial dimensions to create a comprehensive model that can evolve. This integration allows the rendering system to not only display the current state of objects but also predict their future states based on their historical and potential interactions within the game world. Such models are akin to a timeline for each object, detailing its past, present, and future transformations.
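A per-object timeline can be sketched as sorted keyframes with interpolation between them. This minimal Python version assumes that position alone describes an object’s state and that linear interpolation between keyframes is acceptable; the `Timeline` class and its methods are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class Timeline:
    """Per-object 4D record: keyframe times kept sorted, with matching positions."""
    times: list = field(default_factory=list)
    positions: list = field(default_factory=list)

    def add(self, t, pos):
        """Insert a keyframe, keeping times sorted."""
        i = bisect_right(self.times, t)
        self.times.insert(i, t)
        self.positions.insert(i, pos)

    def state_at(self, t):
        """Linearly interpolate position at time t, clamped to the first/last keyframe."""
        if not self.times:
            raise ValueError("empty timeline")
        i = bisect_right(self.times, t)
        if i == 0:
            return self.positions[0]
        if i == len(self.times):
            return self.positions[-1]
        t0, t1 = self.times[i - 1], self.times[i]
        p0, p1 = self.positions[i - 1], self.positions[i]
        u = (t - t0) / (t1 - t0)
        return tuple(a + u * (b - a) for a, b in zip(p0, p1))
```

Past, present, and future are then just different query times against the same record.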

Temporal Coherence and Predictive Rendering

A critical aspect of omniscient rendering is maintaining temporal coherence, ensuring that object states are consistent over time. Predictive rendering techniques come into play here, where algorithms forecast the future states of objects based on predefined rules or learned behaviors. This prediction extends beyond mere animation, encompassing changes due to physical interactions, environmental factors, and player inputs, providing a seamless and dynamic gaming experience.
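As a sketch of rule-based prediction, the code below forecasts a falling object’s height with a fixed time step and a simple bounce rule, then checks one possible notion of temporal coherence: no frame-to-frame jump faster than an assumed maximum speed. The gravity, restitution, and speed values are illustrative assumptions, and `predict` and `is_coherent` are hypothetical names. Using a fixed step keeps the forecast deterministic, so re-running it always yields the same timeline.

```python
def predict(pos, vel, steps, dt=1 / 60, gravity=-9.81, floor=0.0, restitution=0.5):
    """Forecast height over time with fixed-step semi-implicit Euler integration."""
    states = [(pos, vel)]
    for _ in range(steps):
        vel += gravity * dt
        pos += vel * dt
        if pos < floor:  # simple bounce rule: clamp to the floor and reverse velocity
            pos = floor
            vel = -vel * restitution
        states.append((pos, vel))
    return states


def is_coherent(states, dt, max_speed):
    """True if no consecutive pair of states jumps faster than max_speed."""
    return all(abs(b[0] - a[0]) <= max_speed * dt + 1e-12
               for a, b in zip(states, states[1:]))
```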

Computational Methods in 4D Rendering

Implementing omniscient rendering involves complex computational methods that can process and manage 4D data efficiently. Techniques like 4D ray tracing, which extends traditional 3D ray tracing by treating time as a fourth coordinate of each ray, are pivotal. Because each ray is evaluated against the scene as it exists at the ray’s time, this method allows light and shadows to be simulated in a temporally consistent manner, accounting for spatial relationships with other objects and reflecting the changing states of objects and environments over time.
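One way to read “time as a ray’s fourth dimension” is the approach used for motion blur in distributed ray tracing: every ray carries a time value, and moving geometry is evaluated at that time before the intersection test. The sketch below assumes a sphere that moves linearly between two centers; `Ray` and `MovingSphere` are hypothetical names, not an existing library API.

```python
import math
from dataclasses import dataclass


@dataclass
class Ray:
    origin: tuple
    direction: tuple
    time: float  # the ray's fourth coordinate


@dataclass
class MovingSphere:
    center0: tuple  # center at time t0
    center1: tuple  # center at time t1
    t0: float
    t1: float
    radius: float

    def center(self, time):
        """Linearly interpolated center at the given time."""
        u = (time - self.t0) / (self.t1 - self.t0)
        return tuple(a + u * (b - a) for a, b in zip(self.center0, self.center1))

    def hit(self, ray, t_min=1e-4, t_max=math.inf):
        """Distance along the ray to the nearest hit at ray.time, or None."""
        c = self.center(ray.time)
        oc = tuple(o - ci for o, ci in zip(ray.origin, c))
        a = sum(d * d for d in ray.direction)
        half_b = sum(o * d for o, d in zip(oc, ray.direction))
        cc = sum(o * o for o in oc) - self.radius ** 2
        disc = half_b * half_b - a * cc
        if disc < 0:
            return None
        root = math.sqrt(disc)
        for t in ((-half_b - root) / a, (-half_b + root) / a):
            if t_min < t < t_max:
                return t
        return None
```

The same ray direction can hit the sphere at one time and miss it at another, which is what makes shadows and reflections follow the object’s timeline.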

Data Storage and Access

The data storage and access mechanisms for omniscient rendering are paramount, as they must handle vast datasets representing the different states of each object through time. Efficient data structures and databases are required to store these 4D datasets, enabling quick retrieval and manipulation to render the game world in real time. Techniques from big data and time-series databases can be adapted to manage this spatiotemporal data effectively.
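As a small illustration of spatiotemporal indexing, the sketch below hashes each record into a bucket keyed by its grid cell and time slice, so a lookup touches one bucket instead of scanning the whole dataset. The cell size, slice length, and the `SpatioTemporalIndex` class are assumptions for illustration; a production system might instead use an octree, an R-tree, or a time-series database.

```python
from collections import defaultdict


class SpatioTemporalIndex:
    """Buckets (object, position, time) records by (grid cell, time slice)."""

    def __init__(self, cell_size, time_slice):
        self.cell_size = cell_size
        self.time_slice = time_slice
        self.buckets = defaultdict(list)

    def _key(self, pos, t):
        # Floor division maps positions and times to integer bucket coordinates.
        cx, cy, cz = (int(c // self.cell_size) for c in pos)
        return (cx, cy, cz, int(t // self.time_slice))

    def insert(self, obj_id, pos, t):
        self.buckets[self._key(pos, t)].append((obj_id, pos, t))

    def query(self, pos, t):
        """Records in the same cell and time slice as (pos, t)."""
        return list(self.buckets.get(self._key(pos, t), []))
```

This only answers same-cell queries; a radius search would also need to visit neighbouring cells and slices.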

Integration with Game Engines

Integrating omniscient rendering into existing game engines poses significant challenges, requiring fundamental changes to how game engines manage and render objects. The game engine must be capable of processing the 4D data, rendering it according to the player’s interactions and the game’s internal logic. This integration would likely necessitate the development of new engine architectures or extensive modifications to existing ones to support the complex data and computational demands of omniscient rendering.
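In the simplest case, an engine built around pre-calculated data replaces per-frame simulation with a lookup: the current state and the player’s input select the next pre-calculated state and its pre-rendered frame. The sketch below assumes the transition table and frames were produced offline; `PrecomputedEngine` and its fields are hypothetical.

```python
class PrecomputedEngine:
    """Hypothetical engine loop: player input selects a branch of pre-calculated states."""

    def __init__(self, transitions, frames, start):
        self.transitions = transitions  # {(state_id, action): next_state_id}
        self.frames = frames            # {state_id: pre-rendered frame}
        self.state = start

    def step(self, action):
        """Advance by one input and return the frame to display."""
        # Inputs with no pre-calculated outcome leave the world unchanged.
        self.state = self.transitions.get((self.state, action), self.state)
        return self.frames[self.state]
```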

Large Object Models

Conceptual Framework of LOMs

Large Object Models (LOMs) in the context of omniscient rendering are akin to the Large Language Models (LLMs) used in AI for natural language processing. LOMs, however, are designed to encapsulate the vast array of states and interactions possible within a 3D environment. These models are structured to comprehend and predict the behavior and evolution of objects over time, integrating vast datasets that represent the various potential states and interactions of each object in the game world.

Data Integration and Training

Integrating diverse data types and sources is crucial for training LOMs effectively. This includes spatial data, temporal sequences, interaction patterns, and physical properties of objects. The training process involves not only the assimilation of static and dynamic attributes of objects but also understanding the complex relationships and dependencies among them. Advanced machine learning techniques, such as deep learning and reinforcement learning, could be employed to train these models, enabling them to predict future states and interactions based on past and present data.
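As a heavily simplified stand-in for deep learning, the sketch below trains a linear next-state predictor with gradient descent. It assumes a hypothetical ground-truth rule (next position = position + velocity × dt) that generates the training data, so the learned weights should end up close to `[1.0, dt]`. A real LOM would learn from far richer state, interaction, and image data; `make_samples` and `train` are illustrative names.

```python
import random


def make_samples(n, dt=0.1, seed=0):
    """Generate (features, target) pairs from an assumed physics rule."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        pos, vel = rng.uniform(-1, 1), rng.uniform(-1, 1)
        samples.append(((pos, vel), pos + vel * dt))  # ground-truth next position
    return samples


def train(samples, lr=0.1, epochs=500):
    """Full-batch gradient descent on mean squared error for next = w0*pos + w1*vel."""
    w = [0.0, 0.0]
    n = len(samples)
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for (x0, x1), y in samples:
            err = w[0] * x0 + w[1] * x1 - y
            grad[0] += 2 * err * x0 / n
            grad[1] += 2 * err * x1 / n
        w = [w[0] - lr * grad[0], w[1] - lr * grad[1]]
    return w
```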

Scalability and Performance

Scalability is a significant consideration in designing LOMs, as they must handle the complexity and volume of data in a game environment efficiently. This involves optimizing the models to work with high-performance computing resources, ensuring they can process and render the game world in real time. Performance optimization also includes streamlining the data retrieval and processing mechanisms to minimize latency and maximize the responsiveness of the rendering engine. Optimization could also include excluding physically impossible POVs, such as camera positions inside walls, from the final render.
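Excluding impossible POVs can be as simple as filtering candidate camera positions before pre-rendering. This sketch assumes the level’s solid geometry is approximated by axis-aligned boxes and that cameras may not go below a floor plane; `Box` and `reachable_povs` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned solid region given by its minimum and maximum corners."""
    lo: tuple
    hi: tuple

    def contains(self, p):
        return all(lo_c <= c <= hi_c for lo_c, c, hi_c in zip(self.lo, p, self.hi))


def reachable_povs(candidates, solids, floor_y=0.0):
    """Keep camera positions on or above the floor and outside every solid box."""
    return [p for p in candidates
            if p[1] >= floor_y and not any(b.contains(p) for b in solids)]
```

Every POV removed here is one fewer projection per object per time step.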

Integration with Game Development Workflow

LOMs must be seamlessly integrated into the game development workflow to be practical. This integration requires tools and interfaces that allow game designers and developers to interact with the model, input new data, and tweak the system to achieve the desired outcomes. The development of such tools is crucial for enabling the creative process in game design, allowing for the iterative development and testing of game scenarios and interactions within the omniscient rendering framework.

Application in Game Development

Enhanced Realism and Interactivity

Omniscient rendering can significantly enhance the realism and interactivity of game environments. By pre-calculating the potential states of objects and their interactions, games can present a more dynamic and responsive world, or deliberately choose a simpler one. The complexity of the physics engine, and thus the number of possible object states, would have a large impact on game optimization. Players’ actions could have more nuanced and far-reaching consequences, as the game can render outcomes based on a comprehensive simulation of the game world’s physics and logic. This depth of interaction creates a more immersive experience, as the environment reacts in complex ways to player decisions and actions, although achieving this dynamism requires advances in machine learning computation.
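A quick calculation shows why physics complexity dominates. Assuming each object’s state is independent, the number of joint world states is the product of the per-object state counts, and a timeline that offers `b` outcomes per step has up to `b ** T` leaves after `T` steps (fewer if branches merge). The functions below are a hypothetical worked example, not measurements.

```python
import math


def joint_states(states_per_object):
    """Joint world states, assuming independent objects: product of per-object counts."""
    return math.prod(states_per_object)


def branch_count(branching, steps):
    """Upper bound on timeline leaves when each step offers `branching` outcomes."""
    return branching ** steps
```

Ten objects with four states each already give 1,048,576 joint states, and three outcomes per step over 20 steps give 3,486,784,401 branches.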

Predictive AI and Dynamic Storytelling

In game development, AI behavior and narrative progression can be transformed by omniscient rendering. AI characters can use the pre-calculated state trajectories to make decisions that are more nuanced and contextually appropriate, leading to more lifelike and unpredictable interactions. Additionally, dynamic storytelling can evolve more organically, as the narrative can adapt to the multitude of possible player actions and environmental changes, creating a personalized and engaging story experience. Because every additional narrative branch adds pre-calculated states that must be stored and delivered, this is also an area where game design decisions would directly affect storage and delivery optimization.
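A sketch of how an AI character might consult pre-calculated trajectories: for each available action, look up the trajectory computed offline and pick the action whose final state scores best. The trajectory table layout, the scoring function, and the name `choose_action` are assumptions; a real system would likely weigh entire trajectories, not only their end points.

```python
def choose_action(state, actions, trajectories, score):
    """Pick the action whose pre-calculated trajectory ends in the best-scored state.

    trajectories: {(state, action): [state_1, state_2, ...]} built offline.
    score: function mapping a state to a number (higher is better).
    """
    best_action, best_score = None, float("-inf")
    for action in actions:
        path = trajectories.get((state, action))
        if not path:
            continue  # no pre-calculated outcome for this action
        s = score(path[-1])
        if s > best_score:
            best_action, best_score = action, s
    return best_action
```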

Advanced Physics and Environment Simulation

Omniscient rendering allows for advanced physics simulations, where every object’s interactions with the environment and other objects are calculated in detail over time. This can lead to more realistic simulations of destruction, weather effects, and material transformations, enhancing the game’s visual and interactive fidelity. Environmental changes can persist or evolve logically based on the game’s internal timeline, providing a consistent and evolving world.

Optimization of Resources and Loading Times

While omniscient rendering is computationally intensive, it also offers opportunities for optimization. By pre-calculating object states, games can reduce the need for real-time calculations, potentially decreasing loading times and improving performance. Resource management can be optimized by loading only the necessary data for the current and imminent game states, reducing memory overhead and processing requirements. Streaming delivery is also simplified, since compositing the relevant 5D sprites into a single 2D frame would be fast on modern GPUs.
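Loading only current and imminent states can be sketched as a breadth-first search over the pre-calculated transition graph: everything reachable within a few transitions is kept in memory, and everything else can be evicted. The adjacency-dict graph representation, the `depth` parameter, and the name `prefetch_set` are assumptions for illustration.

```python
from collections import deque


def prefetch_set(graph, current, depth):
    """States reachable from `current` within `depth` transitions (breadth-first)."""
    seen = {current}
    frontier = deque([(current, 0)])
    while frontier:
        state, d = frontier.popleft()
        if d == depth:
            continue  # do not expand beyond the prefetch window
        for nxt in graph.get(state, ()):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, d + 1))
    return seen
```

Comparing the prefetch set for the new state with the set already in memory gives the states to load (`new - loaded`) and those to evict (`loaded - new`).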

Challenges in Game Design and Player Experience

The implementation of omniscient rendering also poses challenges in game design, particularly in balancing player freedom with narrative coherence and computational feasibility. Designers must consider how to maintain engaging gameplay and story progression when player actions can lead to a vast range of outcomes. Moreover, ensuring that players perceive and understand the depth and impact of their actions in such a dynamically rendered environment is crucial for maintaining a satisfying and rewarding player experience. Platform games, with their fixed and bounded levels, may be a better fit than open-world games. Open-world games, and randomly generated spaces in particular, would have the additional overhead of pre-rendering the 4D objects and their 5D sprites at load time.

Challenges and Considerations

Computational Resource Demands

One of the primary challenges of omniscient rendering is the immense computational resources required. The process of pre-calculating and storing the vast array of potential object states and interactions demands significant processing power and memory. Managing these resources efficiently, while maintaining real-time performance in the game, is a complex technical challenge that necessitates advances in both hardware and software.
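A back-of-the-envelope estimate illustrates the scale. The function below multiplies out the uncompressed size of one sprite per object, time step, and POV; every input in the example is a hypothetical figure, and the estimate ignores branching timelines, which would multiply it further. `sprite_storage_bytes` is an illustrative name.

```python
def sprite_storage_bytes(objects, time_steps, povs, width, height, bytes_per_pixel=4):
    """Uncompressed size of every pre-rendered sprite: one per (object, step, POV)."""
    return objects * time_steps * povs * width * height * bytes_per_pixel
```

With 100 objects, 1,000 time steps, 64 POVs, and 256 × 256 RGBA sprites, the result is 1,677,721,600,000 bytes, roughly 1.68 TB before any compression.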

Data Management and Storage

The sheer volume of data involved in omniscient rendering poses significant challenges in terms of data management and storage. Efficiently organizing, accessing, and modifying this data in real-time to reflect the dynamic nature of the game world is a daunting task. This requires the development of new data structures and algorithms that can handle high-dimensional data with temporal components, ensuring quick access and updates as the game progresses.
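One familiar technique for high-volume temporal data, similar in spirit to keyframes in video compression, is keyframe plus delta encoding: store a full state every N steps and only the differences in between. This sketch assumes integer (quantized) state components so decoding is exact, and random access replays deltas only from the nearest preceding keyframe. All function names are hypothetical.

```python
def delta_encode(frames, keyframe_every=8):
    """Store every Nth state in full; others as component-wise deltas from the previous state."""
    encoded = []
    for i, frame in enumerate(frames):
        if i % keyframe_every == 0:
            encoded.append(("key", tuple(frame)))
        else:
            prev = frames[i - 1]
            encoded.append(("delta", tuple(c - p for c, p in zip(frame, prev))))
    return encoded


def delta_decode(encoded):
    """Rebuild states; the first entry must be a keyframe."""
    frames, current = [], None
    for kind, values in encoded:
        if kind == "key":
            current = values
        else:
            current = tuple(p + d for p, d in zip(current, values))
        frames.append(current)
    return frames


def state_at(encoded, index, keyframe_every=8):
    """Random access: replay deltas from the nearest preceding keyframe only."""
    start = index - index % keyframe_every
    return delta_decode(encoded[start:index + 1])[-1]
```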

Real-Time Rendering and Latency

Maintaining real-time rendering performance in the face of omniscient rendering’s complexity is another major challenge. The system must rapidly access and render the appropriate state of the game world with minimal latency to ensure a smooth and responsive experience for the player. This involves optimizing the rendering pipeline and possibly redefining rendering algorithms to accommodate the 4D data structures used in omniscient rendering.

Integration with Existing Game Development Pipelines

Integrating omniscient rendering into existing game development pipelines can be challenging, as it may require significant changes to established workflows and tools. Developers must adapt to new ways of designing, testing, and interacting with game content, which could involve a steep learning curve and substantial shifts in development practices.

Future Perspectives on Large Object Models

Advances in Computing Technology

The future of omniscient rendering is closely tied to advancements in computing technology, particularly in the areas of processing power, memory capacity, and data storage solutions. As hardware continues to evolve, with faster processors and more efficient memory systems, the computational barriers to omniscient rendering will diminish. This will enable more sophisticated and detailed simulations, allowing for even more complex and dynamic game worlds.

AI and Machine Learning Integration

AI and machine learning will play a pivotal role in advancing omniscient rendering. As machine learning models become more sophisticated, they will enhance the ability of game engines to predict and render complex scenarios in real-time. This could lead to more adaptive and intelligent game environments that can react in nuanced ways to player actions and environmental changes, pushing the boundaries of interactive storytelling and gameplay.

Virtual and Augmented Reality Applications

Omniscient rendering has significant implications for virtual and augmented reality (VR/AR). With its ability to pre-calculate and render complex, dynamic environments, omniscient rendering could provide the foundation for highly immersive VR and AR experiences. These experiences would be characterized by their responsiveness and realism, offering users a seamless integration of virtual and real-world elements.

The Horizon of Game Design

Looking further ahead, omniscient rendering could fundamentally transform the landscape of game design and development. Game designers will have at their disposal a powerful tool for crafting intricate, living worlds that can evolve and respond to players in unprecedented ways. This could lead to a new genre of games where the narrative and gameplay are genuinely dynamic, shaped by an intricate web of potential outcomes and player choices.

References and Articles

Foundational Theories and Concepts

To understand the theoretical underpinnings of omniscient rendering, references to seminal works in computer graphics and temporal modeling are essential. Key texts like “Computer Graphics: Principles and Practice” provide a comprehensive overview of foundational concepts in 3D rendering and modeling, which are crucial for developing the 4D approaches used in omniscient rendering.

Recent Advances in Rendering Technologies

Articles and papers from leading industry conferences such as SIGGRAPH and GDC offer insights into the latest advances in rendering technologies. These resources can provide detailed case studies and technical descriptions of cutting-edge rendering techniques, including real-time global illumination, advanced shading models, and predictive algorithms that are relevant to omniscient rendering.

Machine Learning and Predictive Modeling

The integration of machine learning in game development is a rapidly evolving field, with significant research being conducted on predictive modeling and AI-driven simulation. Journals like “Artificial Intelligence” and “IEEE Transactions on Pattern Analysis and Machine Intelligence” regularly publish articles on the development of AI models capable of complex predictive behaviors, which are central to the concept of omniscient rendering.

Ethical and Societal Considerations

As omniscient rendering involves collecting and processing large volumes of data, including potentially sensitive user data, ethical considerations are paramount. Publications in the fields of technology ethics and digital privacy can provide valuable perspectives on the responsible use of predictive technologies in gaming and interactive media.

Technical Guides and Manuals

For practical insights into implementing omniscient rendering, technical guides and manuals from game engine developers like Unity and Unreal Engine can be invaluable. These resources often include detailed documentation on the capabilities and limitations of current rendering engines, as well as guides on integrating advanced rendering and AI features into game development projects.